Reliability

Reliability is a statement about how far the information collected can be trusted. Four types of reliability can be distinguished. Usually a correlation coefficient is computed; a high value indicates a high degree of reliability.
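As an illustration of the correlation coefficient mentioned above, the following minimal sketch computes a Pearson correlation between two sets of scores. The function name `pearson_r` and the scores are made up for this example; Pearson's r is one common choice of coefficient, not necessarily the one used in every reliability analysis.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a list has no variation")
    return cov / sqrt(ss_x * ss_y)

# Hypothetical scores of five respondents on two administrations of one test.
first = [3, 4, 2, 5, 4]
second = [3, 5, 2, 4, 4]
r = pearson_r(first, second)
print(f"correlation between the two administrations: {r:.2f}")
```

The closer the coefficient is to 1, the more consistently the two sets of scores order the respondents.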

To properly understand reliability, compare it with unreliability. Consider them the two extremes of a scale from 0 to 100 on which reliability scores can be assigned. A score of 0 means completely unreliable and a score of 100 means completely reliable.

A completely reliable person would always give true and correct answers and never lie. But because nobody knows everything, even an honest person will sometimes give a wrong answer. Nobody can therefore provide 100% reliable information all the time.

The same applies to tests and questionnaires. Ideally the scores on a test are 100% reliable, but in practice that is impossible. The answer alternatives are often fixed in advance. A question like "Are you happy?" can often only be answered on a five-point scale, so an answer like 4.8 cannot be given. The information obtained is therefore not 100% reliable. Furthermore, a respondent who is unhappy and should have entered a 2 may enter a 4 because he does not want to come across as unhappy.

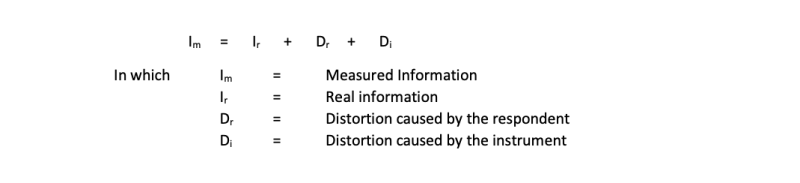

Two causes of unreliable scores can be identified. One is the unreliability of the respondent: incorrect answers are given. The other is the unreliability of the test or questionnaire: it cannot measure precisely. The only certainty is the answer given. A good test is one that minimizes both causes of unreliability. You can also display this in a formula:

The only thing that is known for sure is the score in the database. All other aspects are unknown. The goal of a reliability analysis is to state how well the measured information reflects the real information. The distortions Dr (from the respondent) and Di (from the test or questionnaire) should be minimal.
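The formula itself is not reproduced on this page. One plausible way to write the idea, assuming the two distortions simply add to the real value, is sketched below. The symbols for the measured score and the real score are assumptions introduced here; only Dr and Di come from the text.

```latex
X_{\text{measured}} = X_{\text{real}} + D_r + D_i
```

Under this reading, the measured score equals the real score only when both distortions are zero, which is why a good test tries to keep them as small as possible.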

Four ways of testing can be distinguished to determine the (un)reliability:

1) Test-retest reliability. This investigates whether a person gives the same answers when taking the same test at different times.

2) Test-test reliability. This investigates whether a similar test produces the same results.

3) Internal consistency. This investigates whether a set of items measures one underlying construct.

4) Split half reliability. This examines whether the first half of the test gives a score that is equal to the second half, or whether the score on the even item numbers is equal to the score on the odd item numbers.
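The last two approaches can be sketched in a few lines of code. This is an illustration under stated assumptions, not a prescribed procedure: the data are invented, the function names are hypothetical, internal consistency is computed as Cronbach's alpha, and the split-half correlation is stepped up with the Spearman-Brown correction, which is a common convention that the text above does not mention.

```python
from math import sqrt
from statistics import variance  # sample variance (n - 1 denominator)

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per respondent."""
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def split_half(rows):
    """Odd-even split-half reliability with the Spearman-Brown correction."""
    odd = [sum(row[0::2]) for row in rows]   # items 1, 3, ...
    even = [sum(row[1::2]) for row in rows]  # items 2, 4, ...
    r = pearson_r(odd, even)
    return 2 * r / (1 + r)

# Hypothetical answers of five respondents to four five-point items.
answers = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
alpha = cronbach_alpha(answers)
sb = split_half(answers)
print(f"Cronbach's alpha: {alpha:.2f}, split-half reliability: {sb:.2f}")
```

Test-retest and test-test reliability can be checked in the same spirit by correlating the total scores of two administrations, or of two similar tests, with `pearson_r`.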

These terms are explained in more detail on separate pages of this dictionary.

Final remarks about reliability

Please do not confuse reliability with validity. They are two of the three aspects of measurement that matter for acquiring good data. The third aspect is discriminatory power.

Though reliability is usually about the quality of the data, it can also be applied to the research as a whole. This page started with such a question. To extend reliability in that direction, questions about the quality of the methodology should also be answered. These questions are:

- Is the sample large enough to get reliable results from the analysis?

- Is the response representative enough to make reliable statements about the population?

These aspects are better known under the terms internal and external validity. So it is no surprise that students confuse validity and reliability.

Topics related to reliability

- Test-retest reliability

- Test-test reliability

- Internal consistency (Cronbach's alpha)

- Split half reliability

- Validity